The Era of Context-Aware Prototyping

Stop recreating, start creating.

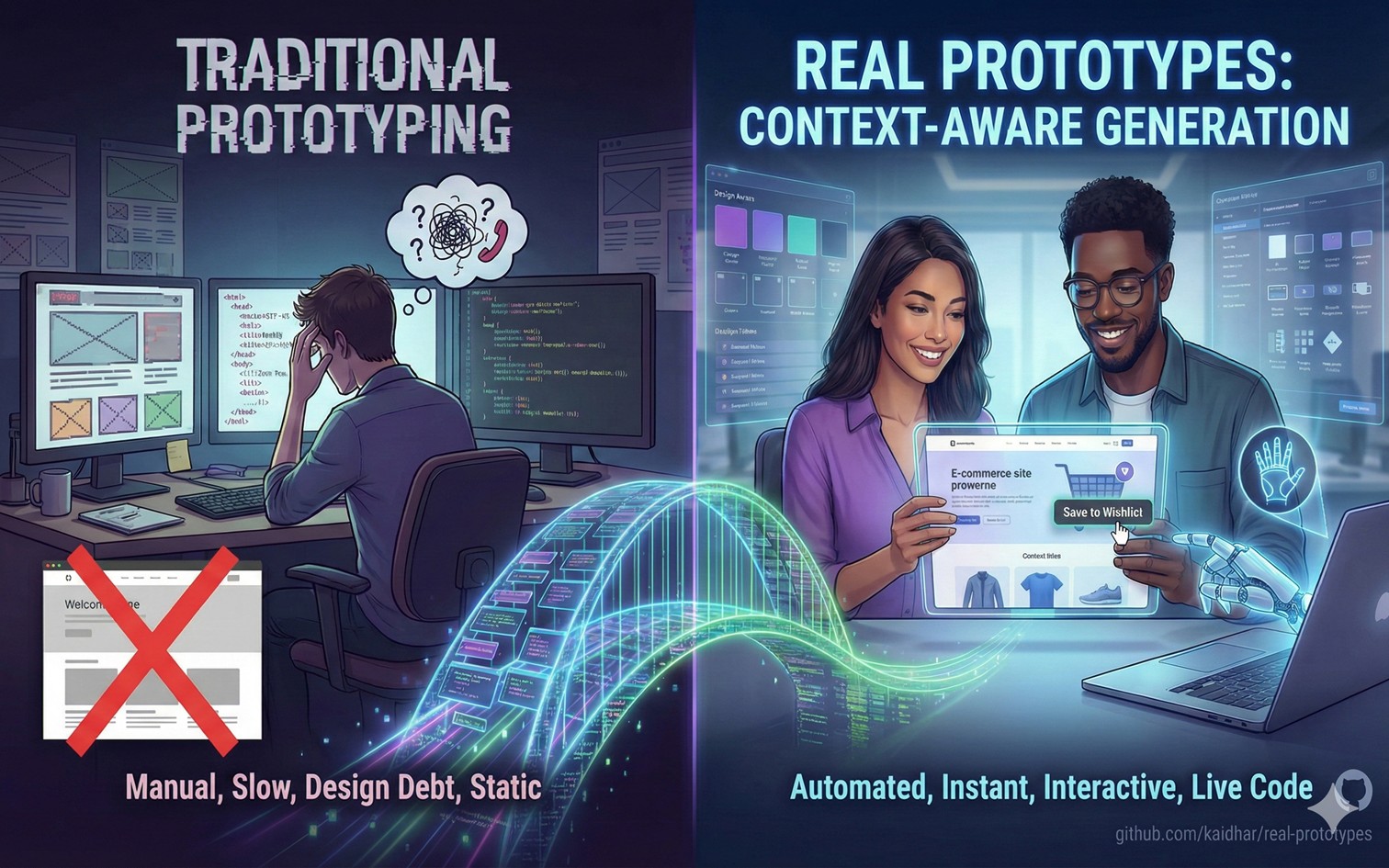

The High Cost of Traditional Prototyping

Building feature prototypes has historically been a game of visual telephone. You start with an idea, but before you can even test the logic, you're stuck spending hours manually recreating the existing platform's styles. It's a massive sink of developer productivity: pixel-matching headers, guessing font weights, and fighting with CSS just to get a "blank canvas" that looks like your actual product.

This "design debt" accumulates, meaning product teams spend 60-70% of their prototyping time on setup rather than the feature itself. When you don't have access to the original Figma files or the source code, this friction transforms from an annoyance into a complete blocker, stifling innovation before it begins.

Bridging the visualization gap for stakeholders

The pain isn't just technical; it's communicative. Product Managers struggle to show stakeholders what a feature would look like in context. Static screenshots with superimposed boxes look amateurish, while generic wireframes fail to convey the "feel" of the integration. This leads to the "visualization gap," where stakeholders reject good ideas simply because the prototype felt "off" or didn't match the platform's brand identity.

We lose critical feedback cycles because we're showing abstract approximations rather than concrete realities. To bridge this, we need a way to instantly generate high-fidelity environments that stakeholders recognize and trust immediately.

Introducing "Real Prototypes": Context-Aware Generation

Automated platform capture and token extraction

"Real Prototypes" fundamentally changes the workflow by automating the initial friction. Instead of manual recreation, the system uses an intelligent agent to visit your target platform—whether it's a public e-commerce site or an authenticated SaaS dashboard. It captures the visual essence of the site, not by downloading source code, but by extracting "design tokens." It identifies the primary color palette, typography hierarchy, spacing scales, and component structures.

This means you get a local environment that inherits the DNA of the target platform instantly. It's like having a senior designer rebuild the site's shell in seconds, ready for your new feature to be injected.
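To make "design tokens" a little more concrete, here is a minimal sketch of how sampled styles could be reduced to a token set. It is not the project's actual implementation: the StyleSample and DesignTokens shapes, and the extractTokens function, are hypothetical names chosen for illustration, and the sketch assumes the styles have already been read from the page.

```typescript
// Hypothetical shape of one sampled element's computed style.
interface StyleSample {
  color: string;
  backgroundColor: string;
  fontFamily: string;
  fontSizePx: number;
  paddingPx: number;
}

// Hypothetical token set derived from the samples.
interface DesignTokens {
  palette: string[]; // most frequent colors first
  fontFamilies: string[]; // most frequent families first
  fontSizesPx: number[]; // unique, ascending
  spacingPx: number[]; // unique, ascending, zero excluded
}

// Rank values by how often they occur; ties keep first-seen order.
function rankByFrequency(values: string[]): string[] {
  const counts: Record<string, number> = {};
  const order: string[] = [];
  for (const v of values) {
    if (counts[v] === undefined) {
      counts[v] = 0;
      order.push(v);
    }
    counts[v] += 1;
  }
  return order
    .map((value, index) => ({ value, index }))
    .sort((a, b) => counts[b.value] - counts[a.value] || a.index - b.index)
    .map((entry) => entry.value);
}

// Deduplicate and sort numerically.
function uniqueAscending(values: number[]): number[] {
  return values
    .filter((v, i) => values.indexOf(v) === i)
    .sort((a, b) => a - b);
}

// Collapse many element-level samples into a small set of tokens.
function extractTokens(samples: StyleSample[], maxColors = 5): DesignTokens {
  const colors: string[] = [];
  for (const s of samples) colors.push(s.color, s.backgroundColor);
  return {
    palette: rankByFrequency(colors).slice(0, maxColors),
    fontFamilies: rankByFrequency(samples.map((s) => s.fontFamily)),
    fontSizesPx: uniqueAscending(samples.map((s) => s.fontSizePx)),
    spacingPx: uniqueAscending(
      samples.map((s) => s.paddingPx).filter((p) => p > 0)
    ),
  };
}
```

Ranking by frequency is only one plausible heuristic for finding a "primary" color; a real extractor would likely also weigh where on the page each color appears.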

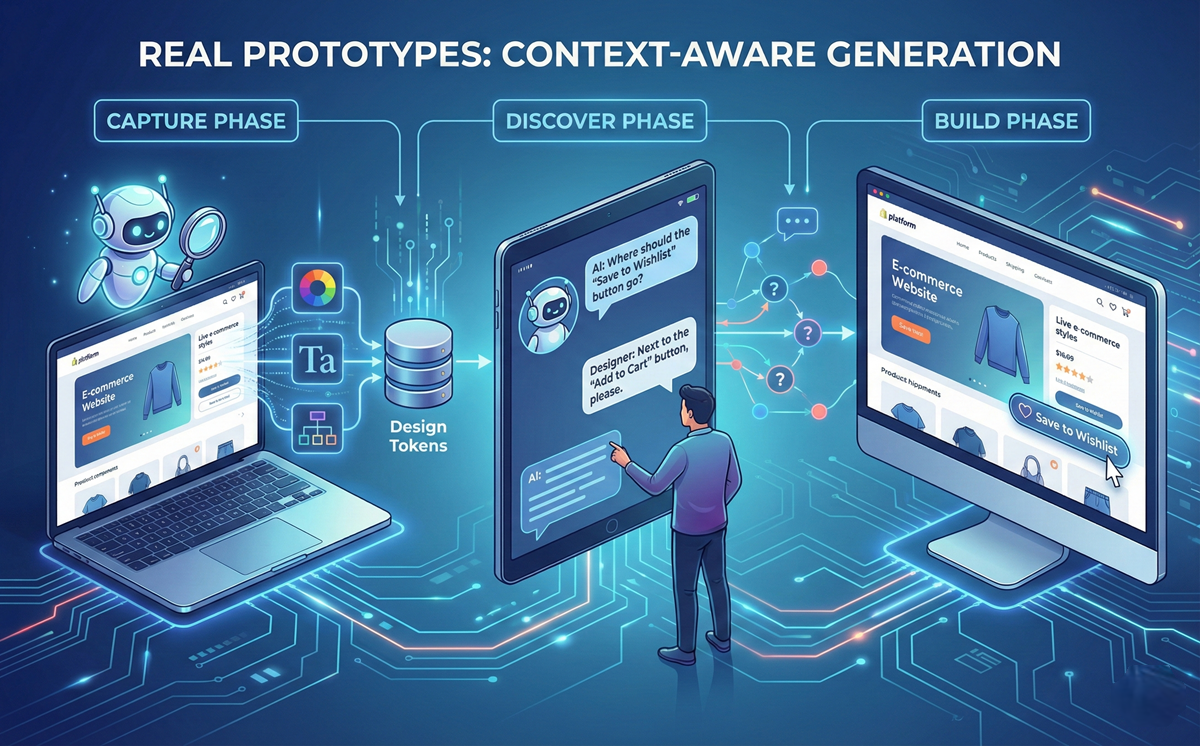

The capture-discover-build engine explained

The magic happens in a three-phase engine: Capture, Discover, and Build. First, the Capture phase secures the visual context. Next, the Discover phase engages you in an interactive dialogue. The AI asks, "Where should this button go?" or "What happens when clicked?" This isn't a static form; it's a dynamic elicitation of requirements. Finally, the Build phase synthesizes these inputs. It combines the captured design tokens with your feature requirements to generate a working prototype.

This context-aware generation ensures that a "Save to Wishlist" button on Amazon looks like an Amazon button, not a generic Bootstrap element. It respects the host organism's design system automatically.
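The three phases can be pictured as a small pipeline in which each phase feeds the next. The sketch below is an assumption about the general shape, not the tool's real code: CapturedContext, FeatureAnswers, PrototypeComponent, build, and runPipeline are all hypothetical names, and the Capture and Discover phases are passed in as plain functions so the example stays self-contained.

```typescript
// Output of the Capture phase (hypothetical, heavily simplified).
interface CapturedContext {
  sourceUrl: string;
  primaryColor: string;
  fontFamily: string;
  borderRadiusPx: number;
}

// Answers gathered during the Discover dialogue (hypothetical).
interface FeatureAnswers {
  label: string; // e.g. the button text
  placement: string; // "Where should this button go?"
  onClick: string; // "What happens when clicked?"
}

// Output of the Build phase: a component description styled by the host tokens.
interface PrototypeComponent {
  name: string;
  label: string;
  placement: string;
  onClick: string;
  style: { backgroundColor: string; fontFamily: string; borderRadius: string };
}

// Turn a human label into a PascalCase component name.
function toComponentName(label: string): string {
  const words = label.replace(/[^A-Za-z0-9]+/g, " ").trim().split(" ");
  return (
    words.map((w) => w.charAt(0).toUpperCase() + w.slice(1)).join("") + "Button"
  );
}

// Build: combine the captured tokens with the feature requirements.
function build(
  context: CapturedContext,
  answers: FeatureAnswers
): PrototypeComponent {
  return {
    name: toComponentName(answers.label),
    label: answers.label,
    placement: answers.placement,
    onClick: answers.onClick,
    style: {
      backgroundColor: context.primaryColor,
      fontFamily: context.fontFamily,
      borderRadius: context.borderRadiusPx + "px",
    },
  };
}

// Run Capture, then Discover, then Build, in that order.
function runPipeline(
  url: string,
  capture: (url: string) => CapturedContext,
  discover: (context: CapturedContext) => FeatureAnswers
): PrototypeComponent {
  const context = capture(url);
  const answers = discover(context);
  return build(context, answers);
}
```

Because the styling comes from the captured context rather than from defaults, the same "Save to Wishlist" answers would yield a differently styled button for each host platform.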

Under the Hood: Architecture and Tech Stack

Leveraging Claude Code and Playwright automation

At its core, this system leverages the synergy between large language models and browser automation. We utilize agent-browser powered by Playwright to navigate the web as a user would. This allows the system to handle complex scenarios, including authenticated sessions where you provide credentials securely.

The AI "sees" the page, identifying DOM elements and computed styles that define the look and feel. It's a sophisticated application of computer vision and DOM analysis, orchestrated by Claude Code to ensure that the extraction is semantic and meaningful, rather than just a raw dump of HTML.
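One small example of turning raw computed styles into something more meaningful is color normalization. Browsers typically report computed colors as rgb(...) or rgba(...) strings, so a palette is easier to compare and deduplicate once those are converted to hex. The helper below is an illustrative sketch with a hypothetical name, cssColorToHex; it handles only the comma-separated rgb/rgba forms and is not taken from the project.

```typescript
// Convert a computed-style color such as "rgb(255, 153, 0)" to "#ff9900".
// Returns null for unsupported formats and for fully transparent colors,
// which carry no visible brand color.
function cssColorToHex(value: string): string | null {
  const pattern =
    /^rgba?\(\s*(\d{1,3})\s*,\s*(\d{1,3})\s*,\s*(\d{1,3})\s*(?:,\s*([\d.]+)\s*)?\)$/;
  const match = pattern.exec(value.trim());
  if (!match) return null;

  const alpha = match[4] === undefined ? 1 : parseFloat(match[4]);
  if (alpha === 0) return null;

  const channels = [match[1], match[2], match[3]].map((c) =>
    Math.min(255, parseInt(c, 10))
  );
  return (
    "#" + channels.map((c) => (c < 16 ? "0" : "") + c.toString(16)).join("")
  );
}
```

For instance, cssColorToHex("rgb(255, 153, 0)") yields "#ff9900", while a transparent background is skipped rather than counted as black.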

Practical Workflow: From Idea to Feature

Configuring the agent for authenticated environments

One of the standout features is the ability to work behind login screens. Configuration lives in a CLAUDE.md file, which keeps credentials on your local machine. You define the platform URL and the necessary login details. When you run the /real-prototypes skill, the agent spins up a secure browser instance, logs in, and navigates to the specific page you want to modify.

This opens up use cases for internal tools, admin dashboards, and banking apps where public scrapers fail. The focus on security and local execution makes it viable for enterprise environments where data privacy is paramount.

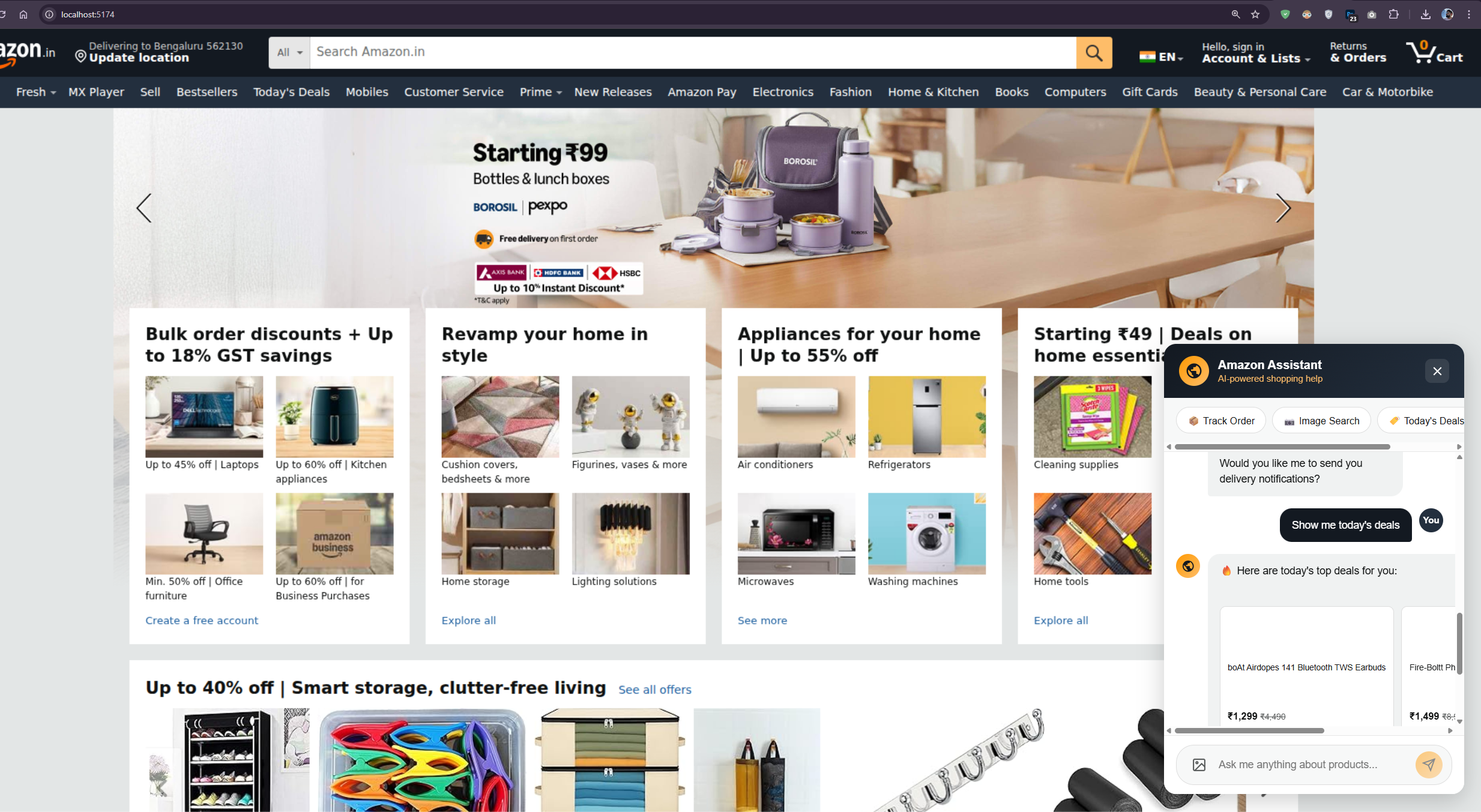

From discovery questions to interactive demo

If you propose a "chatbot" feature, as in the screenshot above, the agent asks follow-up questions about how the feature should look and behave. Within minutes of answering these prompts, you are presented with a locally running Next.js application. You can click the buttons, see the hover states, and experience the flow.

It transforms an abstract request into a tangible, interactive demo that you can screen-share in a meeting immediately, reducing the feedback loop from days to minutes.

The Future of Autonomous Web Development

Accelerating the MVP cycle with agentic AI

This tool represents a shift towards "Agentic AI" in web development. We are moving away from AI that just writes code snippets to AI that understands systems. By automating the contextual setup, we drastically accelerate the MVP (Minimum Viable Product) cycle. Startups can test "what if" scenarios on competitor platforms. Agencies can pitch clients with fully branded prototypes in the first meeting. The cost of experimentation drops to near zero. This democratisation of high-fidelity prototyping means we can test more bad ideas quickly to find the good ones, fostering a culture of rapid innovation.

The shift from static mockups to live code

We are witnessing the death of the static mockup for interactive features. Why draw a picture of a button when you can generate the button itself? Real Prototypes pushes the industry toward "Live Code" design. The artifacts we create during the design phase are becoming indistinguishable from the final product. This convergence reduces the translation errors between designers and developers. As these tools evolve to support animations and multi-page flows (as seen on the roadmap), the distinction between "prototyping" and "building" will continue to blur, ushering in a new era of hyper-efficient software delivery.

Conclusion

Real Prototypes isn't just a tool; it's a methodology that respects the scarcity of your time. By solving the "blank canvas" problem through context-aware generation, it empowers Product Managers, Developers, and Designers to focus on the feature, not the framework.

Whether you are proposing a new internal tool or reimagining a competitor's interface, the ability to clone, modify, and demonstrate in minutes is a superpower. As we embrace these agentic workflows, we stop merely recreating the web that exists and start efficiently building the web that comes next.

The friction is gone; now, the only limit is your imagination.

npx command: npx real-prototypes-skill

GitHub link: https://github.com/kaidhar/real-prototypes